This list is not exhaustive. For example, Wolfram’s “Distance and Similarity Measures” reference lists multiple distance and similarity measures for different kinds of data: numerical (12), boolean (8), string (5), images & color (2), geospatial & temporal (4), and general & mixed (1).

Given the multiple options, I think it’s more useful to know when to apply a specific similarity measure. The exact definitions themselves are not as important, since we can look them up if need be, but the rationales are helpful in figuring out what to consider. The rationales can be gleaned from inspecting the formulas.

Note: similarity indexes may not satisfy the 4 NIST specifications of a similarity metric: non-negativity, identity, symmetry, and subadditivity.

Definitions of \(CP\) and \(CA\)

| | \(f_1\) | \(f_2\) | \(f_3\) | \(f_4\) | \(f_5\) |
|---|---|---|---|---|---|
| d | 0 | 1 | 1 | 0 | 1 |
| q | 0 | 0 | 1 | 1 | 1 |

\(CP(q, d)\), the number of co-presences of binary features, is \(2\) because of \(f_3\) and \(f_5\).

\(CA(q, d)\), the number of co-absences, is \(1\) because of \(f_1\).
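To make the two counts concrete, here is a minimal sketch that tallies them for the vectors in the table. The function name `count_cp_ca` is my own, and the sketch assumes both inputs are equal-length lists of 0s and 1s.

```python
def count_cp_ca(q, d):
    """Return (CP, CA) for two equal-length binary feature lists."""
    if len(q) != len(d):
        raise ValueError("q and d must have the same number of features")
    # Co-presence: the feature is 1 in both vectors.
    cp = sum(1 for qi, di in zip(q, d) if qi == 1 and di == 1)
    # Co-absence: the feature is 0 in both vectors.
    ca = sum(1 for qi, di in zip(q, d) if qi == 0 and di == 0)
    return cp, ca


# Rows of the table above.
d = [0, 1, 1, 0, 1]
q = [0, 0, 1, 1, 1]

print(count_cp_ca(q, d))  # (2, 1)
```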

Russell-Rao

Kinda confusing for \(q\) to mean query in \(f(q, d)\), and something else in \(|q|\).

Where \(|q|\) is the total number of binary features considered:

$$ f(q, d) = \frac{ CP(q, d) }{ |q| } $$

Jaccard is more popular than Russell-Rao because datasets tend to be so sparse that \(|q|\) ends up dominating the ratio, pushing the score towards \(0\) regardless of how many features the two items share.
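A small sketch of the Russell-Rao formula, reusing the example vectors from the definitions table. The padded vectors are a made-up illustration of sparsity (95 extra features that neither item has), not data from any real dataset.

```python
def russell_rao(q, d):
    """Co-presences divided by the total number of binary features."""
    if len(q) != len(d) or len(q) == 0:
        raise ValueError("q and d must be non-empty and of equal length")
    cp = sum(1 for qi, di in zip(q, d) if qi == 1 and di == 1)
    return cp / len(q)


d = [0, 1, 1, 0, 1]
q = [0, 0, 1, 1, 1]
print(russell_rao(q, d))  # 0.4

# Hypothetical sparse variant: append 95 features absent from both items.
padding = [0] * 95
print(russell_rao(q + padding, d + padding))  # 0.02
```

The overlap between the two items has not changed, yet the score drops from 0.4 to 0.02 once the extra all-zero features are counted in the denominator.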

I keep seeing references to “dissimilarity measures” and not much of “similarity measures”. Not sure why a difference in nomenclature exists.

For instance, for measure \(m = F(a, b)\), it’s not as if when \(a\) and \(b\) are similar, then \(m \to 0\) for a dissimilarity measure \(F\), while \(m > 0\) (usually \(m \to 1\)) for a similarity measure \(F\).

Zhang and Srihari define both, illustrating the difference. For example, the Russell-Rao similarity measure is \(\frac{S_{11}}{N}\), while the dissimilarity measure is \(\frac{N - S_{11}}{N}\). \(S_{11}\) is the number of locations where both vectors have a one.
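As a quick check on the two Russell-Rao forms quoted above, they are complements of each other:

$$ \frac{S_{11}}{N} + \frac{N - S_{11}}{N} = \frac{N}{N} = 1 $$

So, at least for this pair, the dissimilarity is just \(1\) minus the similarity. For instance, with \(S_{11} = 2\) and \(N = 5\), the similarity is \(\frac{2}{5}\) and the dissimilarity is \(\frac{3}{5}\).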

Sokal-Michener

$$ f(q, d) = \frac{ CP(q, d) + CA(q, d) }{ |q| } $$

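Because co-absences count towards the score just like co-presences, this is useful where a shared absence is as informative as a shared presence, e.g. medical diagnoses.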
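A minimal sketch of the Sokal-Michener formula on the same example vectors. For binary features, \(CP + CA\) is simply the number of positions where the two vectors agree, which is what the code counts. The bit-flipped vectors are my own illustration of the symmetric treatment of 0s and 1s.

```python
def sokal_michener(q, d):
    """Matching positions (co-presences plus co-absences) over all features."""
    if len(q) != len(d) or len(q) == 0:
        raise ValueError("q and d must be non-empty and of equal length")
    matches = sum(1 for qi, di in zip(q, d) if qi == di)
    return matches / len(q)


d = [0, 1, 1, 0, 1]
q = [0, 0, 1, 1, 1]
print(sokal_michener(q, d))  # 0.6

# Flipping every bit swaps co-presences with co-absences,
# so the score stays the same.
flipped_q = [1 - x for x in q]
flipped_d = [1 - x for x in d]
print(sokal_michener(flipped_q, flipped_d))  # 0.6
```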

Jaccard Index

$$ f(q, d) = \frac{ CP(q, d) }{ CP(q, d) + PA(q, d) + AP(q, d) } $$

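Here \(PA(q, d)\) and \(AP(q, d)\) count the features that are present in exactly one of \(q\) and \(d\). Co-absences do not appear anywhere in the formula, so the index is useful when the data is sparse and co-absences are not important, e.g. a retailer’s list of customer-items.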
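A minimal sketch of the Jaccard index on the example vectors from the definitions table. The denominator \(CP + PA + AP\) is computed as the number of features present in at least one vector. The padded vectors are a hypothetical stand-in for a sparse dataset.

```python
def jaccard(q, d):
    """Co-presences over the features present in at least one vector."""
    if len(q) != len(d):
        raise ValueError("q and d must have the same number of features")
    cp = sum(1 for qi, di in zip(q, d) if qi == 1 and di == 1)
    # CP + PA + AP: every feature that is 1 in q, in d, or in both.
    present_in_either = sum(1 for qi, di in zip(q, d) if qi == 1 or di == 1)
    if present_in_either == 0:
        raise ValueError("undefined when no feature is present in either vector")
    return cp / present_in_either


d = [0, 1, 1, 0, 1]
q = [0, 0, 1, 1, 1]
print(jaccard(q, d))  # 0.5

# Hypothetical sparse variant: 95 extra features absent from both items.
padding = [0] * 95
print(jaccard(q + padding, d + padding))  # 0.5
```

Adding features that neither item has leaves the score untouched, which is the behaviour we want when most of the catalogue is absent from any given basket.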

Cosine Similarity

$$ f(q, d) = \frac{ \sum_{i=1}^{m} (q_i \cdot d_i) }{ \sqrt{ \sum_{i=1}^{m} q_i^2 } \times \sqrt{ \sum_{i=1}^{m} d_i^2 } } = \frac{\vec{q} \cdot \vec{d}}{ |\vec{q}| \times |\vec{d}| } $$

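For vectors with non-negative components, the result ranges from \(0\) (dissimilar) to \(1\) (similar); if negative components are allowed, it ranges from \(-1\) to \(1\).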

The measure only cares about the angle between the two vectors. The magnitudes of the vectors are inconsequential.

Sanity check: say we have \(q = (7, 7)\) and \(d = (2, 2)\):

$$ f(q, d) = \frac{ 7 \cdot 2 + 7 \cdot 2 }{ \sqrt{7^2 + 7^2} \times \sqrt{ 2^2 + 2^2 }} = 1 $$

Looks like cosine similarity is a trend-finder. I’m uncomfortable that \((2, 2)\) is considered to be as close to \( (1, 1)\) as it is to \( (7, 7) \).

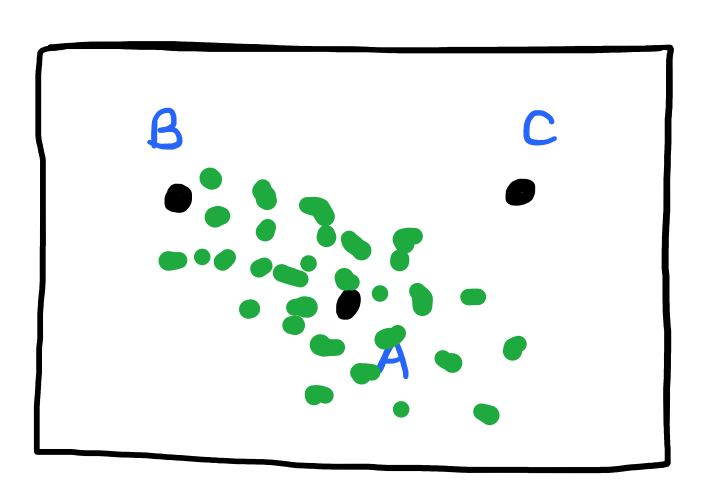
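The sanity check above can be reproduced with a short sketch. The Euclidean distance helper is not part of the notes; I include it only as one magnitude-sensitive measure to contrast with, since it does separate \((1, 1)\) from \((7, 7)\).

```python
import math


def cosine_similarity(q, d):
    """Dot product of q and d divided by the product of their lengths."""
    dot = sum(qi * di for qi, di in zip(q, d))
    norm_q = math.sqrt(sum(qi * qi for qi in q))
    norm_d = math.sqrt(sum(di * di for di in d))
    if norm_q == 0 or norm_d == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_q * norm_d)


def euclidean_distance(q, d):
    """Straight-line distance, shown only for contrast (my addition)."""
    return math.sqrt(sum((qi - di) ** 2 for qi, di in zip(q, d)))


p = (2, 2)
for other in [(1, 1), (7, 7)]:
    # Cosine similarity is (up to rounding) 1 for both points,
    # while the Euclidean distance differs a lot.
    print(other, cosine_similarity(p, other), euclidean_distance(p, other))
```

Both points lie on the same line through the origin as \((2, 2)\), so the angle between the vectors is zero in both cases; only a measure that looks at magnitude can tell them apart.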

Mahalanobis Distance

$$ f\left(\vec{a}, \vec{b}\right) = \sqrt{ \begin{bmatrix} a_1 - b_1 & \cdots & a_m - b_m \end{bmatrix} \times \Sigma^{-1} \times \begin{bmatrix}a_1 - b_1 \\ \vdots \\ a_m - b_m \end{bmatrix} } $$

The Mahalanobis distance scales up the distances along the direction(s) where the dataset is tightly packed.

The inverse covariance matrix, \( \Sigma^{-1} \), rescales the differences so that all features have unit variance and removes the effects of covariance.
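To see the rescaling at work, here is a sketch restricted to two features so that the matrix inverse can be written out by hand. The covariance matrix is made up for illustration: variance \(4\) along the first feature, variance \(1\) along the second, and no covariance. The code evaluates the quadratic form \(\text{diff}^T \Sigma^{-1} \text{diff}\) and then takes its square root, which is how the distance is usually defined.

```python
import math


def mahalanobis_2d(a, b, cov):
    """Mahalanobis distance between 2-D points for a 2x2 covariance matrix."""
    (s11, s12), (s21, s22) = cov
    det = s11 * s22 - s12 * s21
    if det == 0:
        raise ValueError("covariance matrix is singular")
    # Closed-form inverse of a 2x2 matrix.
    inv = ((s22 / det, -s12 / det), (-s21 / det, s11 / det))
    dx, dy = a[0] - b[0], a[1] - b[1]
    # Quadratic form: [dx dy] * inv * [dx dy]^T.
    quad = dx * (inv[0][0] * dx + inv[0][1] * dy) + dy * (inv[1][0] * dx + inv[1][1] * dy)
    return math.sqrt(quad)


# Hypothetical covariance: spread out along x, tightly packed along y.
cov = ((4.0, 0.0), (0.0, 1.0))
origin = (0.0, 0.0)

print(mahalanobis_2d((2.0, 0.0), origin, cov))  # 1.0
print(mahalanobis_2d((0.0, 2.0), origin, cov))  # 2.0
```

Both points are the same Euclidean distance from the origin, but the step along the tightly packed direction counts for twice as much.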

References

- Distance and Similarity Measures. reference.wolfram.com. Accessed Oct 27, 2021.

- Properties of Binary Vector Dissimilarity Measures. Bin Zhang; Sargur N. Srihari. JCIS International Conf. Computer Vision, Pattern Recognition, and Image Processing, Vol. 1, 2003. cedar.buffalo.edu.

- Similarity Coefficients for binary data: Why choose Jaccard over Russell and Rao? stats.stackexchange.com. Accessed Oct 28, 2021.

- Understanding the different types of variable in statistics > Categorical and Continuous Variables. statistics.laerd.com. Accessed Oct 28, 2021.

Nominal variables are variables that have two or more categories, but which do not have an intrinsic order. Dichotomous variables are nominal variables which have only two categories.

Dichotomous attributes (e.g. yes-or-no) are distinct from binary attributes (present vs. absent): binary attributes may be asymmetric, in that co-presence suggests similarity, but co-absence may or may not be considered evidence of similarity.