Copilot-Powered Scenarios

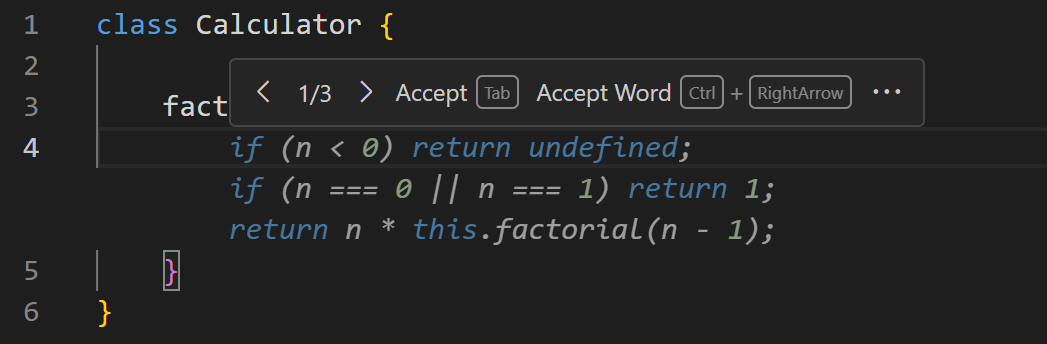

AI Code Completions

I’ve found code completions more distracting than useful. Probably because I already have an idea of what I want to type, and Copilot’s hallucinations slow me down. I’ve turned this off in my IDE.

Start typing in the editor, and Copilot provides code suggestions. You can accept either the next word or the whole line. Credits: code.visualstudio.com.

“Completions” refers to the LLM “completing the statistical probability model of a response”. “Completion” models finish whatever input was provided, but they’re largely extinct. Chat Markup Language (ChatML) is now the dominant paradigm, where there is a system message, and a sequence of user and assistant messages, e.g.,

    <|im_start|>system
    Assistant is an intelligent chatbot designed to help users answer their tax related questions.
    Instructions:
    - Only answer questions related to taxes.
    - If you're unsure of an answer, you can say "I don't know" or "I'm not sure" and recommend users go to the IRS website for more information.
    <|im_end|>
    <|im_start|>user
    When are my taxes due?
    <|im_end|>
    <|im_start|>assistant

LLMs now frame the input inside a ChatML structure, and then respond. It’s not as simple as completing the input. The more accurate term for this is “responses” and not “completions”.
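To make the framing concrete, here is a minimal sketch of how a list of messages could be rendered into a single ChatML string. The `render_chatml` helper is hypothetical and written only for illustration; real chat APIs do this framing on the server side.

```python
from typing import Dict, List

IM_START = "<|im_start|>"
IM_END = "<|im_end|>"


def render_chatml(messages: List[Dict[str, str]]) -> str:
    """Frame {role, content} messages as one ChatML prompt string."""
    parts = []
    for message in messages:
        parts.append(f"{IM_START}{message['role']}\n{message['content']}\n{IM_END}")
    # Leave the assistant turn open: this is the point the model continues from.
    parts.append(f"{IM_START}assistant\n")
    return "\n".join(parts)


prompt = render_chatml([
    {"role": "system", "content": "Only answer questions related to taxes."},
    {"role": "user", "content": "When are my taxes due?"},
])
print(prompt)
```

Even here, the model still continues a string; the difference is that the string is a structured conversation rather than the raw user input.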

The tab-to-accept UI provides a good avenue for collecting metrics. For instance, what portion of suggestions were accepted by developers? Are some models noticeably better than others?
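If such telemetry were available, the acceptance metric itself is simple to compute. The sketch below assumes a hypothetical event log of `(model, was_accepted)` pairs; the model names and the log format are made up for illustration and are not something VS Code exposes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def acceptance_rates(events: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the fraction of shown suggestions that were accepted, per model."""
    shown = defaultdict(int)
    accepted = defaultdict(int)
    for model, was_accepted in events:
        shown[model] += 1
        if was_accepted:
            accepted[model] += 1
    return {model: accepted[model] / shown[model] for model in shown}


# Hypothetical events: one entry per suggestion shown to a developer.
events = [
    ("model-a", True),
    ("model-a", False),
    ("model-b", True),
    ("model-b", True),
]
rates = acceptance_rates(events)
print(rates)
```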

Where can I find this data? Presumably, VS Code guards it, and doesn’t make it publicly available.

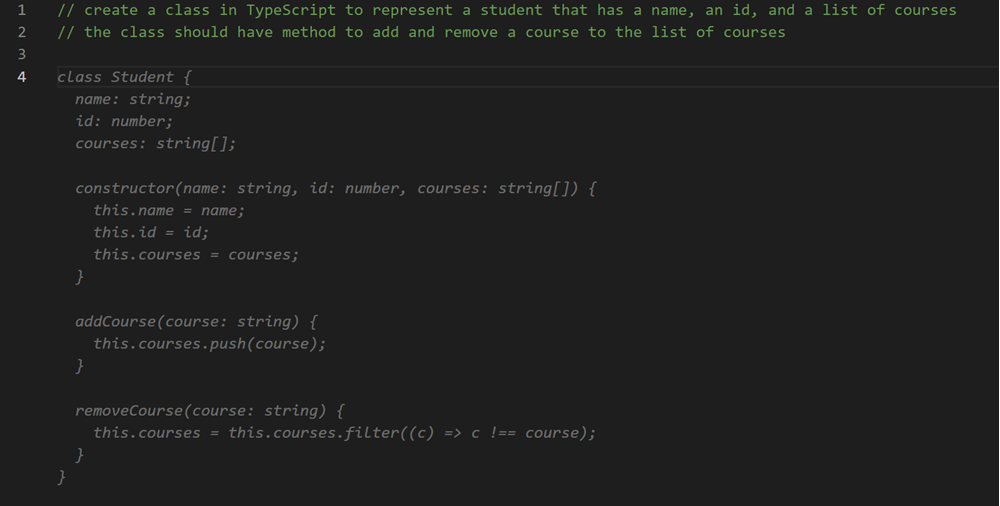

You can use code comments to hint what code Copilot should generate. I find the inline-chat UX more ergonomic because I do not need to come back and delete comments that simply rehash the code. Credits: code.visualstudio.com

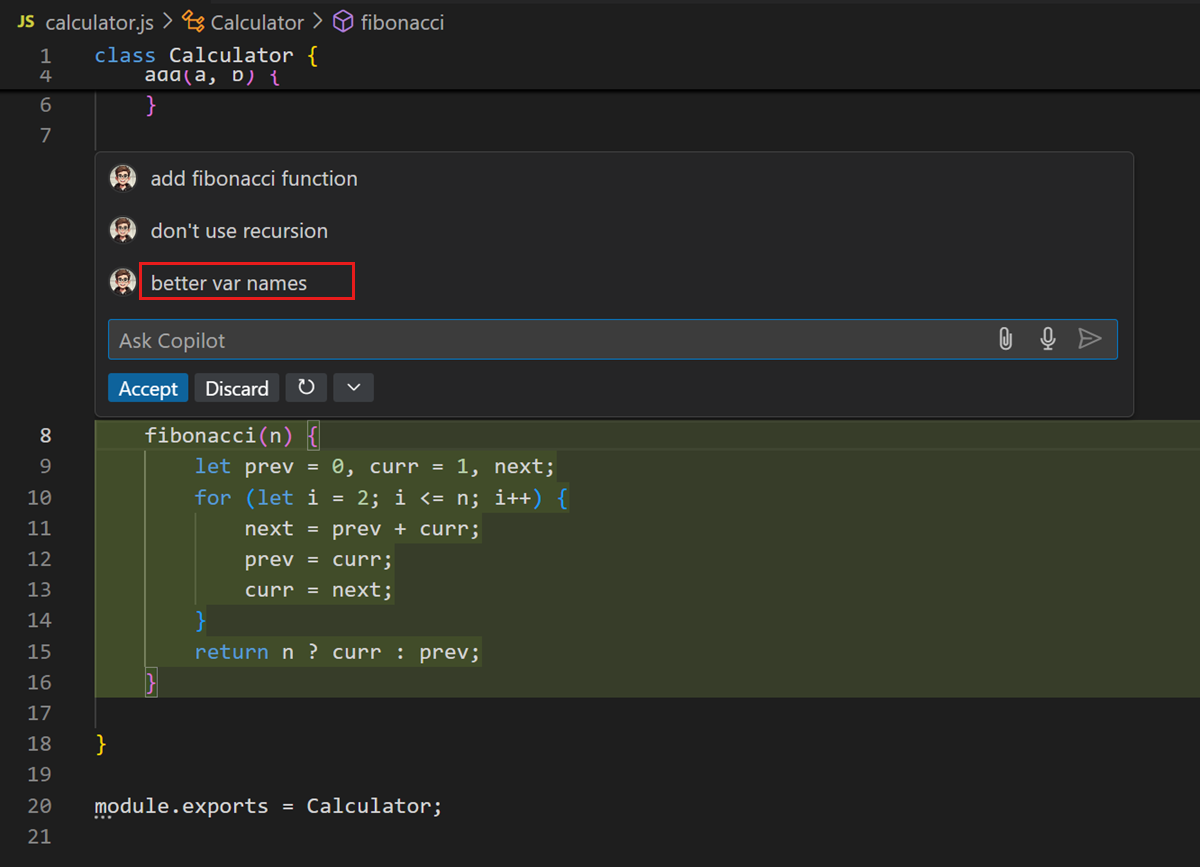

With inline chat, you can iterate with multiple prompts before accepting a suggestion. Credits: code.visualstudio.com

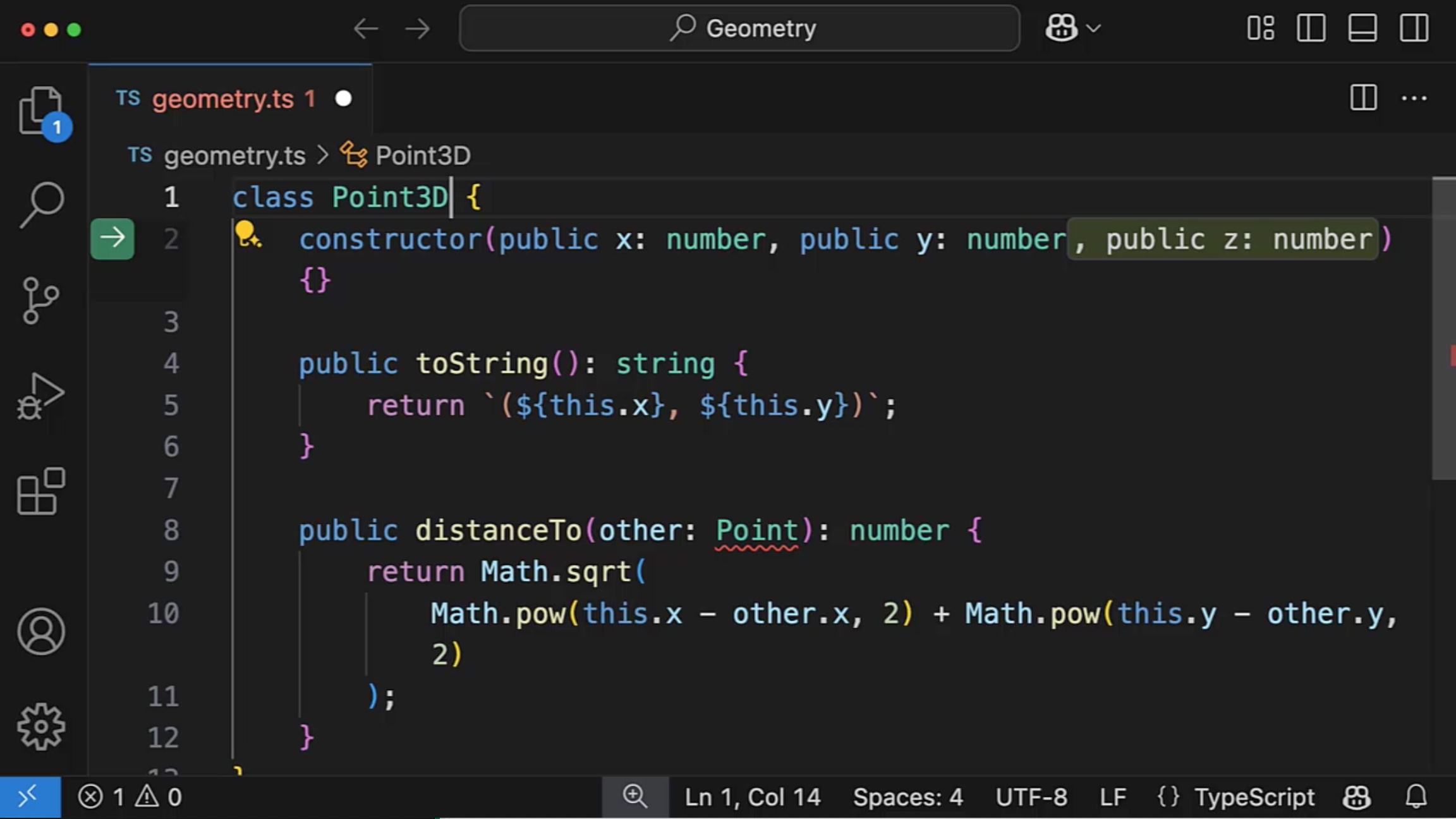

Next Edit Suggestions capture logical flows of code edits. For example, changing the class name from Point to Point3D makes Copilot predict that a z coordinate needs to be added, the toString() and distanceTo() methods need to be updated, and so forth. Credits: code.visualstudio.com.

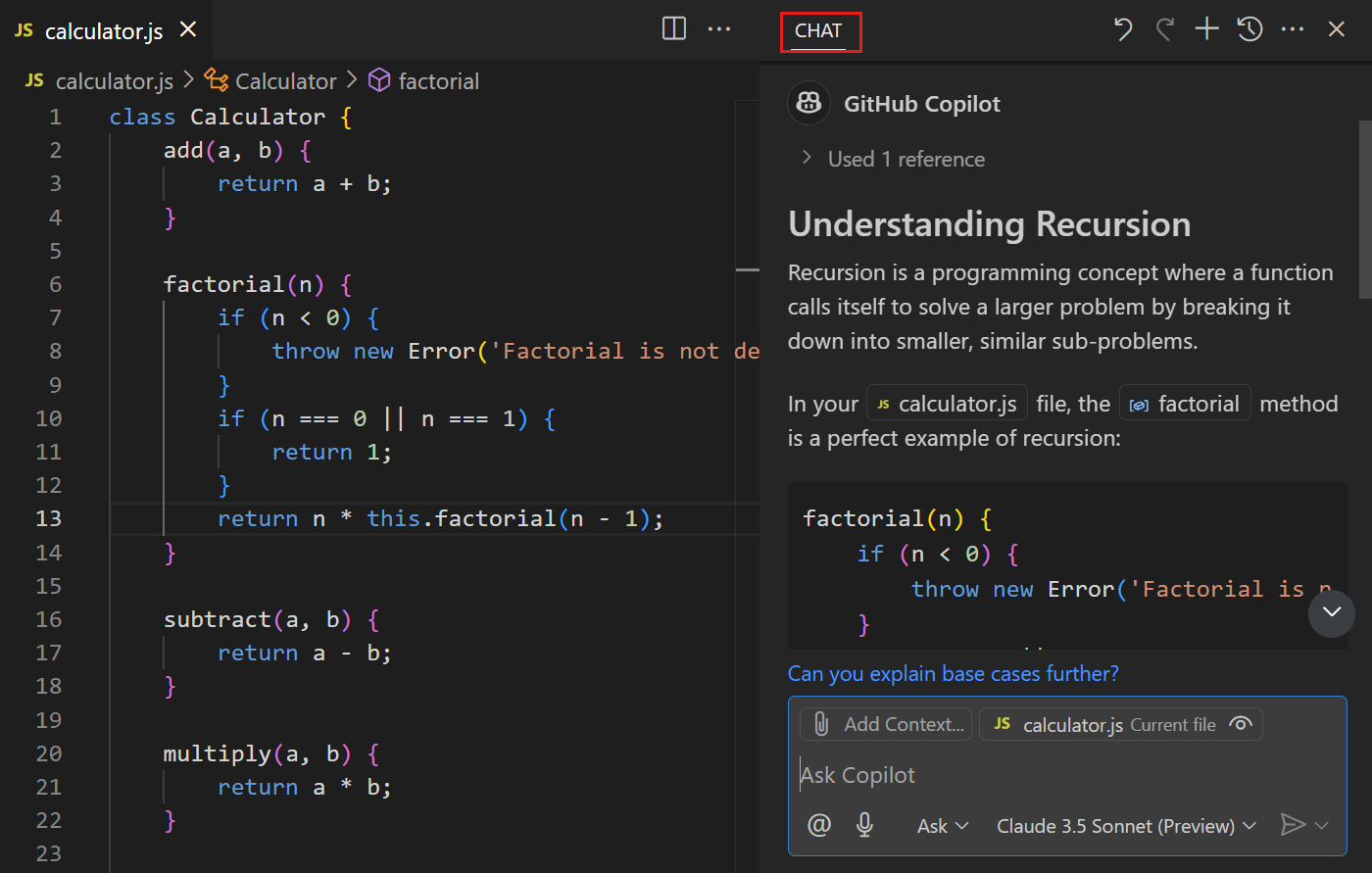

Natural Language Chat

Chat view in VS Code. Other UI surfaces include editor inline chat, terminal inline chat, and quick chat. Credits: code.visualstudio.com.

The Chat View is designed to be a multi-turn conversation, where the history of the conversation is used as context for the current prompt. You don’t need to repeat the context when asking follow-up questions.

Chat has different modes:

- Ask: Understand how a piece of code works, brainstorm design ideas, etc.

- Edit: Apply edits across multiple files, e.g., implementing a new feature, fixing a bug, refactoring code.

- Agent: Autonomously implement high-level requirements with minimal guidance, invoking tools for specialized tasks, iterating to resolve issues.

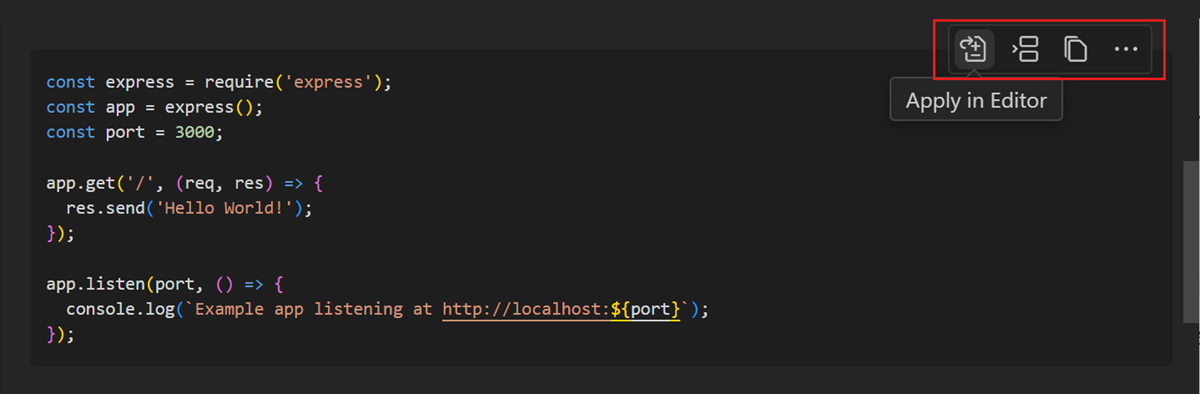

The response from chat mode can contain code blocks (or even scaffolding for generating a new workspace) that can be applied to your codebase. Ask mode does not imply read-only. Credits: code.visualstudio.com.

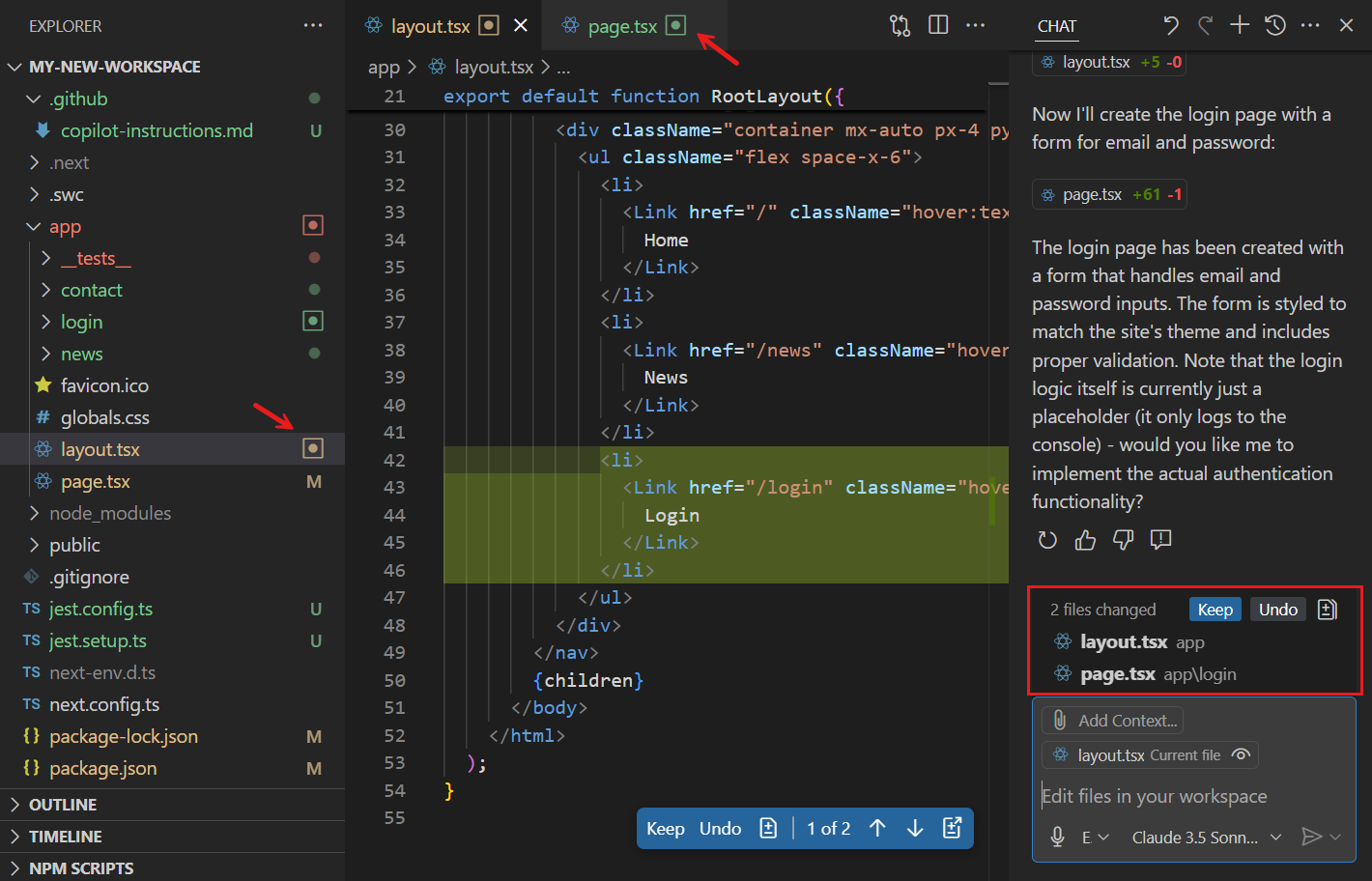

Edit mode gives you a chance to accept/discard edits (with a setting for auto-accepting edits after a configurable delay). You can also accept/discard edits on a per-file basis. Credits: code.visualstudio.com.

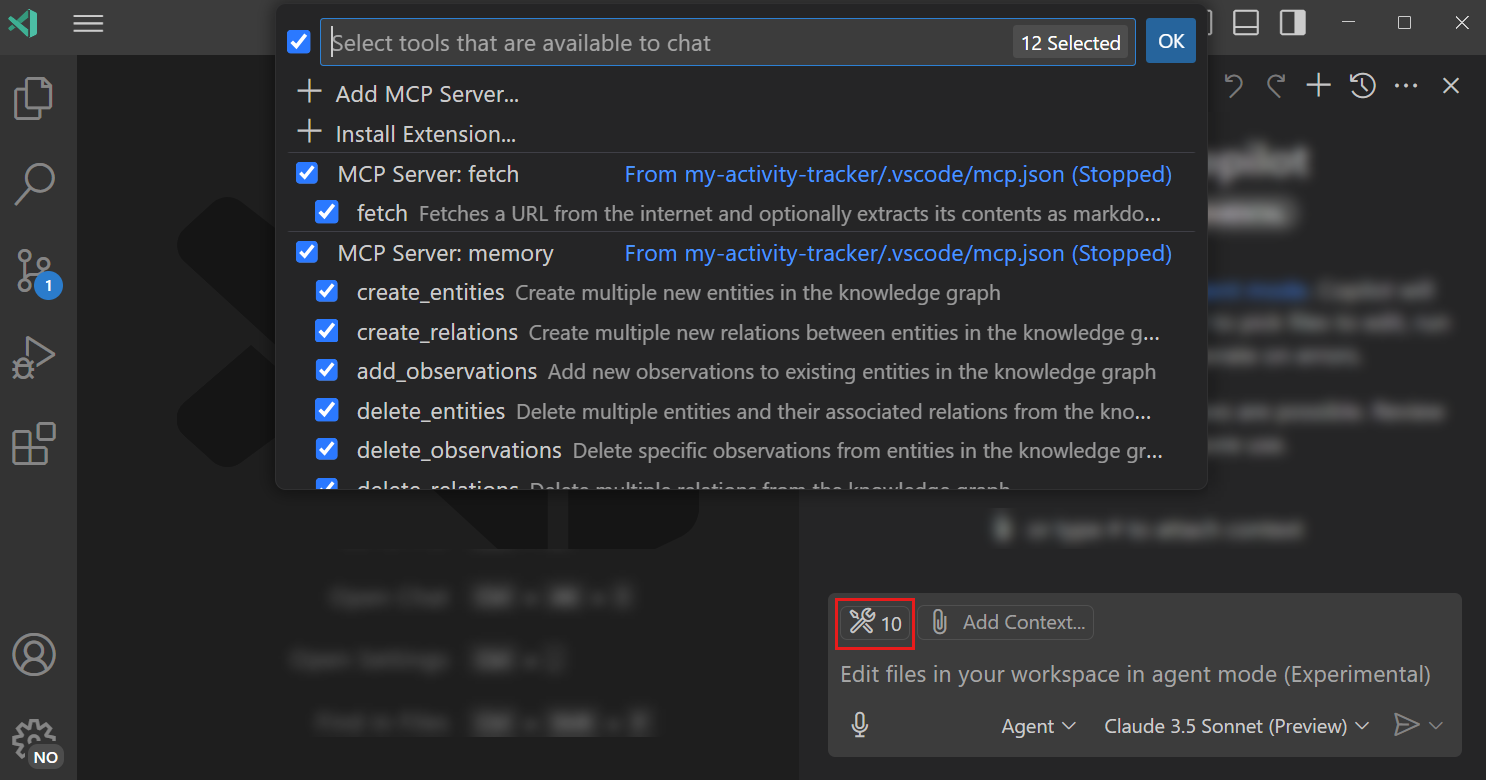

Agent mode allows adding tools that can be used to satisfy the query. Before tool invocation, one needs to approve (there is a setting to auto-approve a certain tool indefinitely, or for the current session, or only for remote environments). The accept/discard UI is similar to that of Edit mode. Credits: code.visualstudio.com.

To ease the verification part, some tools have --dry-run options that show the blast radius before committing to a course of action. For example, searching for site:git-scm.com dry-run surfaces commands like commit, push, add, fetch, clean, etc., which support --dry-run variations.

This seems like something that the LLM should be able to do, e.g., first perform the --dry-run variant and then prompt for consent for the effectual variant. It does run counter to the “let the agent do its thing and then come back with results” UX because one needs to babysit the LLM.
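The dry-run-then-consent idea can be sketched as a small wrapper. Everything here is an assumption for illustration: `run_with_preview` is a hypothetical helper, not something Copilot provides, and the runner is a stand-in so that the example does not touch a real repository.

```python
from typing import Callable, List, Optional


def run_with_preview(
    command: List[str],
    run: Callable[[List[str]], str],
    confirm: Callable[[str], bool],
) -> Optional[str]:
    """Run `command --dry-run` first; run the real command only after consent."""
    preview = run([*command, "--dry-run"])
    if not confirm(preview):
        return None  # Declined: nothing effectual was executed.
    return run(command)


# Stand-in runner that records calls instead of executing anything.
calls: List[List[str]] = []


def fake_run(command: List[str]) -> str:
    calls.append(command)
    return "Would remove build/" if "--dry-run" in command else "Removing build/"


declined = run_with_preview(["git", "clean", "-f"], fake_run, confirm=lambda preview: False)
approved = run_with_preview(["git", "clean", "-f"], fake_run, confirm=lambda preview: True)
print(declined, approved)
```

In practice, `run` could wrap `subprocess.run(command, capture_output=True, text=True)` and `confirm` could be the tool-approval prompt. The babysitting cost is visible in the shape of the code: every effectual command needs a human decision.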

Choosing between edit mode and agent mode?

- Edit mode assumes that only code changes are needed and you know the precise scope. Agent mode autonomously determines relevant context, and invokes tools and terminal commands.

- Agent mode might take longer as it involves multiple steps. Agent mode might cost more because of multiple requests to the backend.

- Agent mode is self-healing because it evaluates the outcome and iterates on the results, e.g., running tests after a code edit.
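The self-healing behavior boils down to an edit-evaluate-iterate loop. The sketch below is a simplified mental model, not Copilot's actual implementation; `apply_edit` and `run_tests` are hypothetical callables standing in for the model's edits and the test runner.

```python
from typing import Callable, List, Optional


def iterate_until_green(
    apply_edit: Callable[[Optional[str]], None],
    run_tests: Callable[[], Optional[str]],
    max_attempts: int = 3,
) -> bool:
    """Edit, run the tests, and feed failures back until they pass or attempts run out."""
    feedback: Optional[str] = None
    for _ in range(max_attempts):
        apply_edit(feedback)
        feedback = run_tests()  # None means that all tests passed.
        if feedback is None:
            return True
    return False


# Stand-ins: the first test run fails, the second passes.
failures = ["test_total fails: expected 3, got 2"]
seen_feedback: List[Optional[str]] = []


def fake_apply_edit(feedback: Optional[str]) -> None:
    seen_feedback.append(feedback)


def fake_run_tests() -> Optional[str]:
    return failures.pop(0) if failures else None


succeeded = iterate_until_green(fake_apply_edit, fake_run_tests)
print(succeeded, seen_feedback)
```

Each pass through the loop is another request to the backend, which is why agent mode tends to take longer and cost more than edit mode.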

Adding Context to Chat

Use # to provide context to your prompt, e.g.,

- #changes: get the diffs of changed files in source control.

- #codebase: perform a codebase search across the current workspace.

- #fetch: get the contents of a web page.

- #githubRepo: perform a web search within a GitHub repo.

- #problems: get the list of problems for the current workspace.

- #searchResults: get the results from the Search view.

- #terminalLastCommand: get the last run terminal command and its status.

- #testFailure: get the list of test failures.

- #usages: get symbol references across the workspace.

… with sample usage:

- Add a new API route for updating the address info #codebase

- Add a login button and style it based on #styles.css (files as context)

- How do I use the useState hook in React 18? #fetch https://18.react.dev/reference/react/useState#usage

- Where is #getUser used? #usages (symbol in an open file as context)

- How does routing work in next.js? #githubRepo vercel/next.js

When attaching a file, the full contents of the file are included if possible. If that’s too large to fit into the context window, then an outline with functions and their descriptions will be included. If the outline is also too large, then the file won’t be part of the prompt.
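The fallback order described above can be sketched as follows. The token estimate (roughly four characters per token) and the function names are assumptions for illustration; VS Code's actual budgeting logic is not documented here.

```python
from typing import Optional


def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly 4 characters per token.
    return max(1, len(text) // 4)


def attachment_for_prompt(full_text: str, outline: str, budget: int) -> Optional[str]:
    """Prefer the full file, then its outline, and otherwise omit the file."""
    if estimate_tokens(full_text) <= budget:
        return full_text
    if estimate_tokens(outline) <= budget:
        return outline
    return None  # Neither fits, so the file is left out of the prompt.


full_text = "x" * 400        # about 100 tokens under the assumption above
outline = "def f(): ..."     # about 3 tokens

print(attachment_for_prompt(full_text, outline, budget=200) == full_text)
print(attachment_for_prompt(full_text, outline, budget=10) == outline)
print(attachment_for_prompt(full_text, outline, budget=1))
```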

In the age of LLMs, one may bias towards smaller, more modular files. It’s still a tradeoff though. Too many small files, and seeing the big picture is a pain.

Chat supports vision capabilities, e.g., attaching a sketch of a UI and asking agent mode to implement it.

The inbuilt simple browser allows opening webpages in VS Code. From it, one can send selected elements, CSS, and images to the chat.

Making Chat an Expert in Your Workspace

@workspace is a chat participant that takes control of the user prompt, and uses the codebase to provide an answer. It can only be used in ask mode and can’t invoke other tools. Example: @workspace Which API routes depend on this service?

Other chat participants include @vscode, @terminal, and @github.

Extensions can also contribute chat participants, e.g., PostgreSQL, FastAPI Chat, Grug Brained Developer, etc. Layer is an extension publisher that creates chat participants on behalf of companies, e.g., SQLAlchemy, Mongo, Twilio, Vite Chat, etc.

#codebase is a tool that performs code search and adds relevant code as context to the chat prompt and can invoke other tools. The LLM stays in control. It can be used in all chat modes. Example: Add unit tests and run them #codebase. #codebase affords more flexibility than @workspace.

The workspace index contains relevant text files, and excludes files like .tmp and .out. It also excludes files excluded from Git/VS Code, unless they’re currently open. Binary files, e.g., images and PDFs, are excluded.

The index can either be local or remote. Remote indexes are hosted on GitHub and are updated whenever you push changes. For projects with fewer than 2,500 indexable files, Copilot can build a semantic index stored locally. For projects that have no remote index and more than 2,500 indexable files, Copilot builds a basic index that is stored locally; that said, this index is more limited in the kinds of questions that it can help answer.
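As a way to keep the three cases straight, here is a sketch of the selection rules as I read them. The precedence of a remote index over a local one, and the treatment of a project with exactly 2,500 files, are my assumptions; the function and return labels are made up.

```python
def choose_index(has_remote_index: bool, indexable_files: int) -> str:
    """Pick the kind of workspace index, following the rules described above."""
    if has_remote_index:
        return "remote"  # Assumption: a remote index wins when one exists.
    if indexable_files < 2500:
        return "local-semantic"
    return "local-basic"  # More limited in the questions it can help answer.


print(choose_index(has_remote_index=True, indexable_files=10_000))
print(choose_index(has_remote_index=False, indexable_files=800))
print(choose_index(has_remote_index=False, indexable_files=10_000))
```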

For now, information beyond the code is not available, e.g., who contributed to this file? However, there is a Git MCP server available.

Smart Actions

These include:

- Generating commit messages and PR descriptions

- Refactors like renaming symbols

- Generating alt text for images in Markdown

- Generating doc strings

- Copilot > Explain for pieces of code

- Copilot > Fix for source file and terminal errors

- /fixTestFailure command (useful in agent mode too)

- Copilot > Review and Comment for code selection

- Copilot Code Review in the GitHub Pull Requests extension

- Semantic search results in the Search View.

For tasks like renaming symbols, what’s the advantage of bringing an LLM (and thus non-determinism) into the mix? Isn’t this a solved problem already?

Not sold on the commit messages and PR descriptions. The LLM can at best provide a summary of what changed, but not why. That said, it’s an improvement from commit messages like “more fixes”. The LLM-generated commit messages should be a springboard for the developer to add the why behind the what.

Customizing Copilot

Reusable Instructions and Prompts

Instruction files are a shorthand for automatically including the contained context in every applicable chat query. .github/copilot-instructions.md is automatically included in every chat request.

.github/instructions/**/*.instructions.md can have an applyTo front matter for where it’s automatically applied (e.g., a specific folder, ** for global application, etc.).

There are specific github.copilot.chat.*.instructions settings for various scenarios, e.g., codeGeneration, testGeneration, reviewSelection, commitMessageGeneration, and pullRequestDescriptionGeneration. These settings can reference other *.instructions.md files, in addition to supporting text entries.
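To get a feel for how a comma-separated applyTo value selects files, here is a rough approximation using Python's `fnmatch`. This is not VS Code's glob implementation: `fnmatch` lets `*` cross directory separators, so treat the `applies_to` helper as an illustrative sketch only.

```python
from fnmatch import fnmatchcase


def applies_to(apply_to: str, relative_path: str) -> bool:
    """Approximate whether any comma-separated glob matches a workspace-relative path."""
    for pattern in (part.strip() for part in apply_to.split(",")):
        if fnmatchcase(relative_path, pattern):
            return True
        # fnmatch requires a "/" to match "**/"; also try the pattern without
        # the prefix so that files at the workspace root are matched.
        if pattern.startswith("**/") and fnmatchcase(relative_path, pattern[3:]):
            return True
    return False


print(applies_to("**/*.ts,**/*.tsx", "src/components/App.tsx"))
print(applies_to("**/*.ts,**/*.tsx", "index.ts"))
print(applies_to("**/*.ts,**/*.tsx", "README.md"))
print(applies_to("**", "docs/guide.md"))
```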

Sample .github/instructions/typescript-react.instructions.md:

    ---
    applyTo: "**/*.ts,**/*.tsx"
    ---
    # Project coding standards for TypeScript and React

    Apply the [general coding guidelines](./general-coding.instructions.md) to all code.

    ## TypeScript Guidelines
    - Use TypeScript for all new code
    - Follow functional programming principles where possible
    - Use interfaces for data structures and type definitions
    - Prefer immutable data (const, readonly)
    - Use optional chaining (?.) and nullish coalescing (??) operators

    ## React Guidelines
    - Use functional components with hooks
    - Follow the React hooks rules (no conditional hooks)
    - Use React.FC type for components with children
    - Keep components small and focused
    - Use CSS modules for component styling

*.instructions.md files look useful for instructions that aren’t well-defined enough for a deterministic linter to apply. For example, “Follow functional programming principles where possible” seems tricky to implement static rules for. However, “Use camelCase for variables, functions, and methods” is a solved problem for linters, and so using an LLM is a sub-optimal choice. But maybe the LLM is easy to configure and maintain, and catches most of the issues so ¯\_(ツ)_/¯

Unlike .instructions.md files that supplement your existing prompts, .prompt.md files are standalone prompts for common tasks. By default, they run in agent mode and can utilize tools specified in the front matter. They have access to variables using the ${variableName} syntax.

Sample create-react-form.prompt.md:

    ---
    mode: 'agent'
    tools: ['githubRepo', 'codebase']
    description: 'Generate a new React form component'
    ---
    Your goal is to generate a new React form component based on the templates in #githubRepo contoso/react-templates.

    Ask for the form name if ${input:formName} is not provided. Ask for the fields if not provided.

    Requirements for the form:
    * Use form design system components: [design-system/Form.md](../docs/design-system/Form.md)
    * Use `react-hook-form` for form state management:
      * Always define TypeScript types for your form data
      * Prefer *uncontrolled* components using register
      * Use `defaultValues` to prevent unnecessary rerenders
    * Use `yup` for validation:
      * Create reusable validation schemas in separate files
      * Use TypeScript types to ensure type safety
      * Customize UX-friendly validation rules

Usage in chat: /create-react-form: formName=MyForm
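As a mental model for the ${input:...} variables, the substitution could look something like the sketch below. The `fill_inputs` helper and its regular expression are hypothetical; VS Code resolves these variables itself, and its exact rules may differ.

```python
import re
from typing import Dict, List, Tuple

INPUT_VARIABLE = re.compile(r"\$\{input:([A-Za-z_][A-Za-z0-9_]*)\}")


def fill_inputs(template: str, values: Dict[str, str]) -> Tuple[str, List[str]]:
    """Substitute ${input:name} variables and report the names that are still missing."""
    missing: List[str] = []

    def replace(match):
        name = match.group(1)
        if name in values:
            return values[name]
        if name not in missing:
            missing.append(name)
        return match.group(0)  # Leave unresolved variables untouched.

    return INPUT_VARIABLE.sub(replace, template), missing


template = "Ask for the form name if ${input:formName} is not provided."
print(fill_inputs(template, {"formName": "MyForm"}))
print(fill_inputs(template, {}))
```

The list of missing names is what a chat UI would need in order to ask the user for the remaining values.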

.instructions.md and .prompt.md files can be saved in the user data folder and synced across VS Code instances. One could also check them into version control to share them within a team.

Choice of Language Models

Some models are good at fast coding, e.g., GPT-4o, Claude Sonnet 3.7, Gemini 2.0 Flash, while others are good at reasoning/planning, e.g., Claude Sonnet 3.7 Thinking, o1, o3-mini.

One can also provide a valid API key to use models directly from providers like Anthropic, Ollama, etc. This allows for experimenting with models that are not yet built-in, bypassing standard rate limits, etc. However, these models only apply to the chat experience, and not other features like commit-message generation, repository indexing, intent detection, side queries, etc. Responsible AI filtering is not applied when using your own model either.

Add Tools for Agentic Modes

Copilot can also connect MCP-compatible servers to agentic workflows, e.g., GitHub’s MCP server offers tools to list repositories, create PRs, manage issues, etc.

#codebase searches your codebase and fetches files that are relevant to your question, e.g., Explain how authentication works in #codebase.

#codebase can be a litmus test. For things that you already know how to do within the codebase, how well does #codebase collect the context?

For large repositories, file_search can be slow. Chromium instructs Copilot to use Haystack search instead of file_search.

#githubRepo performs a code search within a GitHub repository, e.g., Add unit tests for my app. Use the same test setup and structure as #githubRepo rust-lang/rust.

How should one handle cases where the context lives outside of the repository? For example, what if the context is in Azure DevOps, or even in an Azure DevOps wiki? Is there a tool to fetch Azure DevOps resources from GitHub Copilot?

#fetch fetches content from a web page, e.g., summarize #fetch https://code.visualstudio.com/docs/copilot/chat/copilot-chat-context.

How does #fetch deal with pages that require authentication? I can see how #githubRepo would be useful for private repositories because I’m signed into a GitHub profile, but #fetch applies to more than private GitHub pages.

Model Context Protocol (MCP) Support

Model Context Protocol (MCP) provides a standard way for applications to share contextual information with LLMs, expose tools and capabilities to LLMs, and build composable workflows. An MCP server offers resources (context and data), prompts (templated messages and workflows), and tools (functions for the AI model to execute).

The modelcontextprotocol/servers repository showcases some servers, e.g., Filesystem for secure file access with access controls; Fetch for web content fetching and LLM-optimized conversion; Memory for a knowledge graph-based persistent memory system; Sequential Thinking for dynamic and reflective problem-solving through thought sequences; Git to read, search, and manipulate Git repositories.

Of the 3 MCP server primitives (resources, prompts, and tools), Copilot only supports adding tools. MCP tools can be added in a workspace via .vscode/mcp.json or in your settings.json.

Sample MCP server configs: Perplexity, Git, Sequential Thinking

    {
      // 💡 Inputs are prompted on first server start, then stored securely by VS Code.
      "inputs": [
        {
          "type": "promptString",
          "id": "perplexity-key",
          "description": "Perplexity API Key",
          "password": true
        }
      ],
      "servers": {
        // https://github.com/ppl-ai/modelcontextprotocol/
        "Perplexity": {
          "type": "stdio",
          "command": "npx",
          "args": ["-y", "server-perplexity-ask"],
          "env": {
            "PERPLEXITY_API_KEY": "${input:perplexity-key}"
          }
        },
        "git": {
          "command": "uvx",
          "args": ["mcp-server-git"]
        },
        "sequential-thinking": {
          "command": "npx",
          "args": [
            "-y",
            "@modelcontextprotocol/server-sequential-thinking"
          ]
        }
      }
    }

References

- GitHub Copilot in VS Code. code.visualstudio.com. Accessed Jun 1, 2025.

- Code completions with GitHub Copilot in VS Code. code.visualstudio.com. Accessed Jun 1, 2025.

- Use chat in VS Code. code.visualstudio.com. Accessed Jun 1, 2025.

- Manage context for AI. code.visualstudio.com. Accessed Jun 1, 2025.

- haystack.instructions.md - Chromium Code Search. source.chromium.org. Accessed Jun 2, 2025.

- Making chat an expert in your workspace. code.visualstudio.com. Accessed Jun 3, 2025.

- Search results - tag:chat-participant | Visual Studio Code, Visual Studio Marketplace. marketplace.visualstudio.com. Accessed Jun 3, 2025.

- Layer: Build great MCP Servers for your API. www.buildwithlayer.com. Accessed Jun 3, 2025.

- Use edit mode in VS Code. code.visualstudio.com. Accessed Jun 3, 2025.

- GitHub Copilot in VS Code settings reference. code.visualstudio.com. Accessed Jun 3, 2025.

- Use agent mode in VS Code. code.visualstudio.com. Accessed Jun 3, 2025.

- Use MCP servers in VS Code (Preview). code.visualstudio.com. Accessed Jun 3, 2025.

- GitHub - modelcontextprotocol/servers: Model Context Protocol Servers. github.com. Accessed Jun 3, 2025.

- Specification - Model Context Protocol. modelcontextprotocol.io. Mar 26, 2025. Accessed Jun 3, 2025.

- Prompt engineering for Copilot Chat. code.visualstudio.com. Accessed Jun 3, 2025.

- Terminology evolution? 'completion' vs 'response' - Community - OpenAI Developer Community. community.openai.com. May 14, 2025. Accessed Jun 8, 2025.

- How to work with the Chat Markup Language (preview) - Azure OpenAI | Microsoft Learn. learn.microsoft.com. Accessed Jun 8, 2025.

- Copilot smart actions in Visual Studio Code. code.visualstudio.com. Accessed Jun 8, 2025.

- Customize chat responses in VS Code. code.visualstudio.com. Accessed Jun 8, 2025.

- AI language models in VS Code. code.visualstudio.com. Accessed Jun 8, 2025.

My work is primarily in Microsoft’s ecosystem, so learning Copilot usage in VS Code is pretty important. If not for my own productivity gains, then for having knowledgeable conversations with coworkers about using LLMs as a SWE.