Journals

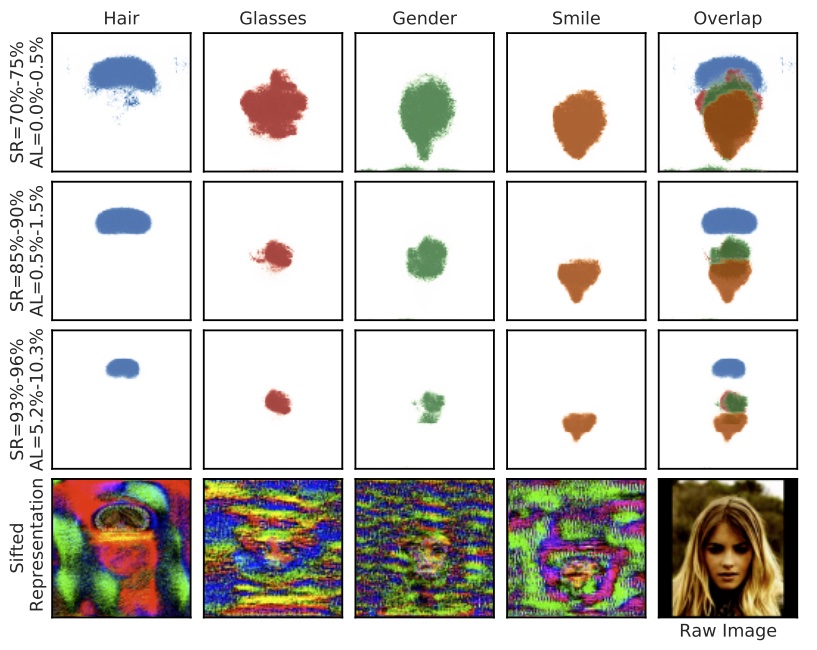

Mireshghallah et al. note that prediction services can still make accurate predictions using only a fraction of the data collected from a user device. They propose Cloak, which suppresses the features that are not pertinent to the prediction task (i.e., features that can consistently tolerate added noise without degrading utility). Cloak offers a provable degree of privacy and, unlike cryptographic techniques, does not increase prediction latency. Given the training data, labels, a pre-trained model, and a privacy-utility knob, they (1) find the pertinent features through perturbation training, and (2) learn utility-preserving constant values with which to suppress the non-pertinent features. During inference, they efficiently compute a sifted representation, which is sent to the service provider.
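The idea behind step (1) can be illustrated with a toy sketch. It is not the paper's perturbation training; it swaps in a simpler per-feature noise-tolerance check: add Gaussian noise to one feature at a time and call the feature non-pertinent if accuracy stays at or above a threshold. The classifier `toy_model`, the noise scale `sigma`, and the threshold `min_accuracy` (standing in for the privacy-utility knob) are all hypothetical.

```python
import random


def toy_model(x):
    """Hypothetical pre-trained classifier: it only looks at features 0 and 1."""
    return 1 if x[0] + x[1] > 0 else 0


def accuracy(model, xs, ys):
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(xs)


def find_pertinent(model, xs, ys, sigma=1.0, min_accuracy=0.95, seed=0):
    """Return one flag per feature: True if noise on that feature alone
    pushes accuracy below min_accuracy (i.e., the feature is pertinent)."""
    rng = random.Random(seed)
    pertinent = []
    for j in range(len(xs[0])):
        noisy = []
        for x in xs:
            x_noisy = list(x)
            x_noisy[j] += rng.gauss(0.0, sigma)  # perturb only feature j
            noisy.append(x_noisy)
        pertinent.append(accuracy(model, noisy, ys) < min_accuracy)
    return pertinent


rng = random.Random(42)
xs = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(1000)]
ys = [toy_model(x) for x in xs]  # labels the model gets right on clean inputs
mask = find_pertinent(toy_model, xs, ys)
print(mask)  # [True, True, False]
```

Feature 2 never influences the toy model, so noise on it leaves accuracy untouched and it is flagged as non-pertinent, while noise on either of the other features flips a sizeable share of predictions.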

They go through the trouble of learning utility-preserving constants because (1) simply adding noise could still leak data and/or push values outside the classifier's input domain, and (2) zeroing out the non-pertinent features might degrade accuracy, because zeros might not match the distribution of the data that the classifier expects.
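Point (2) can be illustrated with a toy classifier whose second input normally lies in [4, 6]. The sketch assumes feature 1 has already been marked non-pertinent, and it swaps in a plain grid search over candidate constants as a stand-in for the constants Cloak learns. `toy_model`, `suppress`, `accuracy_with`, and the grid are hypothetical.

```python
import random


def toy_model(x):
    """Hypothetical classifier trained on x[0] in [-1, 1] and x[1] in [4, 6]."""
    return 1 if x[0] + 0.2 * x[1] > 1.0 else 0


def suppress(x, mask, constants):
    """Keep pertinent features (mask True); replace the rest with constants."""
    return [xi if keep else c for xi, keep, c in zip(x, mask, constants)]


def accuracy_with(constant, xs, ys, mask):
    # constants[0] is unused because feature 0 is kept (mask[0] is True)
    preds = [toy_model(suppress(x, mask, [0.0, constant])) for x in xs]
    return sum(p == y for p, y in zip(preds, ys)) / len(ys)


rng = random.Random(0)
xs = [[rng.uniform(-1, 1), rng.uniform(4, 6)] for _ in range(1000)]
ys = [toy_model(x) for x in xs]
mask = [True, False]  # assume feature 1 was found non-pertinent

zero_acc = accuracy_with(0.0, xs, ys, mask)
candidates = [i * 0.5 for i in range(21)]  # 0.0, 0.5, ..., 10.0
best_c = max(candidates, key=lambda c: accuracy_with(c, xs, ys, mask))
best_acc = accuracy_with(best_c, xs, ys, mask)
print(f"zero: {zero_acc:.2f}, constant {best_c}: {best_acc:.2f}")
```

Zeroing the feature pushes every score below the decision threshold, so the model predicts class 0 for everything and accuracy falls to roughly the share of class-0 examples. A constant near the middle of the feature's usual range keeps the input in-distribution and preserves most predictions.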

References

- Not All Features Are Equal: Discovering Essential Features for Preserving Prediction Privacy. Mireshghallah, Fatemehsadat; Taram, Mohammadkazem; Jalali, Ali; Elthakeb, Ahmed Taha Taha; Tullsen, Dean; Esmaeilzadeh, Hadi. The Web Conference, 2021. University of California, San Diego; Amazon; Samsung Electronics. doi.org. scholar.google.com. 2021.

- Perturbation Theory. en.wikipedia.org. Accessed Oct 11, 2021.

- Perturbation Theory in Deep Neural Network (DNN) Training. Prem Prakash. towardsdatascience.com. Mar 20, 2020. Accessed Oct 11, 2021.

- Adversarial Machine Learning. en.wikipedia.org. Accessed Oct 11, 2021.

Perturbation theory comprises methods for finding an approximate solution to a problem by starting from the exact solution of a related, simpler problem and expressing the full solution as a power series in a small parameter \(\epsilon\): \(A = A_0 + \epsilon A_1 + \epsilon^2 A_2 + \cdots\), where \(A\) is the full solution, \(A_0\) is the known solution to the exactly solvable initial problem, and \(A_1, A_2, \ldots\) are the coefficients of the first-order, second-order, and higher-order terms.
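As a worked example (chosen here for illustration, not taken from the sources), the positive root of \(x^2 + \epsilon x - 1 = 0\) is exactly \(x = 1\) when \(\epsilon = 0\). Expanding the root in \(\epsilon\) gives \(x \approx 1 - \epsilon/2 + \epsilon^2/8\), so \(A_0 = 1\), \(A_1 = -1/2\), and \(A_2 = 1/8\). The sketch compares each truncation of the series against the exact root from the quadratic formula.

```python
import math


def exact_root(eps):
    """Positive root of x^2 + eps*x - 1 = 0 via the quadratic formula."""
    return (-eps + math.sqrt(eps * eps + 4.0)) / 2.0


def perturbative_root(eps, order):
    """Truncate A0 + eps*A1 + eps^2*A2 after the given order."""
    coeffs = [1.0, -0.5, 0.125]  # A0, A1, A2
    return sum(c * eps ** k for k, c in enumerate(coeffs[: order + 1]))


eps = 0.1
errors = [abs(perturbative_root(eps, n) - exact_root(eps)) for n in range(3)]
for n, err in enumerate(errors):
    print(f"order {n}: error {err:.2e}")
```

Each added order shrinks the error, which is the practical appeal of the method when \(\epsilon\) is small.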

In deep neural network training, perturbation is used to address various issues, e.g., perturbing gradients to tackle the vanishing gradient problem, perturbing weights to escape saddle points, and perturbing inputs to defend against malicious attacks.
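The saddle-point case can be sketched with a hypothetical two-parameter loss \(f(w) = w_0^2 + (w_1^2 - 1)^2\), which has a saddle at the origin and minima at \((0, \pm 1)\). Plain gradient descent started exactly at the saddle never moves, because the gradient there is zero. Adding a small random nudge to the weights whenever the gradient nearly vanishes (in the spirit of perturbed gradient descent) lets it escape. The loss, learning rate, step count, and noise scale are assumptions for illustration.

```python
import random


def f(w):
    """Toy loss with a saddle at (0, 0) and minima at (0, +1) and (0, -1)."""
    return w[0] ** 2 + (w[1] ** 2 - 1) ** 2


def grad(w):
    return [2 * w[0], 4 * w[1] * (w[1] ** 2 - 1)]


def gradient_descent(w, lr=0.05, steps=500, perturb=0.0, seed=0):
    rng = random.Random(seed)
    w = list(w)
    for _ in range(steps):
        g = grad(w)
        if perturb and sum(gi * gi for gi in g) < 1e-12:
            # Near a stationary point: nudge the weights with small Gaussian noise.
            w = [wi + rng.gauss(0.0, perturb) for wi in w]
            g = grad(w)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w


w_plain = gradient_descent([0.0, 0.0])
w_pert = gradient_descent([0.0, 0.0], perturb=1e-3)
print(f"plain: loss {f(w_plain):.3f}; perturbed: loss {f(w_pert):.2e}")
```

Without the nudge the weights stay at the saddle with loss 1; with it, the descent direction along \(w_1\) is exposed and the weights settle near one of the minima.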

The Wikipedia article on adversarial machine learning features cases of researchers perturbing inputs to fool ML systems, e.g., perturbing the appearance of a stop sign such that an autonomous vehicle classified it as a merge or speed limit sign.
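One common way to craft such inputs is the fast gradient sign method (FGSM), which moves every input feature by \(\epsilon\) in the direction that increases the loss. The sketch below applies FGSM to a hypothetical logistic-regression model with hand-picked weights; it only illustrates the idea and is not necessarily the method used in the stop-sign work.

```python
import math


def score(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x):
    return 1 if score(w, b, x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """FGSM for logistic regression with binary cross-entropy loss,
    whose gradient w.r.t. the input is (sigmoid(score) - y) * w."""
    p = 1.0 / (1.0 + math.exp(-score(w, b, x)))
    grad_x = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad_x)]


w, b = [0.5, -1.0, 2.0], 0.0  # hypothetical trained weights
x, y = [0.2, 0.1, 0.1], 1
x_adv = fgsm(w, b, x, y, eps=0.1)
print(predict(w, b, x), predict(w, b, x_adv))  # 1 0
```

Each feature moves by at most 0.1, yet the score drops by \(\epsilon \lVert w \rVert_1 = 0.35\), from 0.2 to -0.15, which is enough to flip the prediction.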